SOMI Technology Consulting Program with Vantari VR

It was my pleasure to be part of the first Technology Consulting Program (TCP) run by SOMI: Society of Medical Innovation, which ran for 6 weeks. Over that time, here is what I learned and worked on:

- the basic architecture of the Unity and Unreal game engines

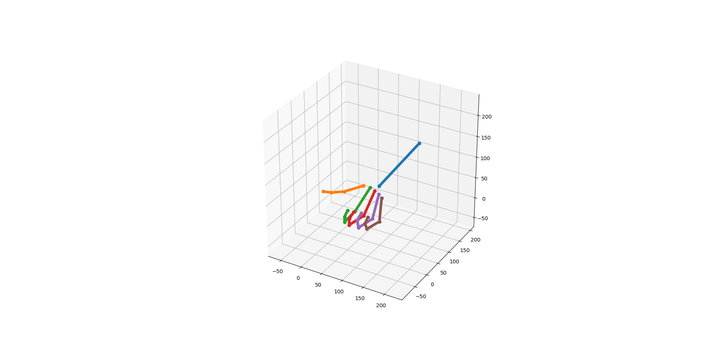

- worked with the Leap Motion controller to capture hand poses

- implemented code (built on top of open source libraries, e.g., scikit) to calculate hand pose joint angles and positions, which served as the features for training our machine learning model

- trained a simple machine learning model for basic gesture recognition (done with Minh using scikit-learn)

- and, to tie everything together, implemented a Unity script that takes in the hand pose from the Leap Motion controller and passes it to a Python script that does the feature extraction and gesture recognition.
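To give a flavour of the feature extraction step without touching anything under NDA, here is a minimal sketch of how joint angles can be computed from 3D joint positions. It is not the project code: the JSON layout (finger name mapped to a list of `[x, y, z]` joint positions) and the function names `joint_angle`, `finger_features` and `features_from_json` are all made up for illustration, and it uses only the Python standard library.

```python
import json
import math


def joint_angle(a, b, c):
    """Angle in degrees at joint b, formed by the points a-b-c."""
    v1 = [a[i] - b[i] for i in range(3)]
    v2 = [c[i] - b[i] for i in range(3)]
    n1 = math.sqrt(sum(x * x for x in v1))
    n2 = math.sqrt(sum(x * x for x in v2))
    if n1 == 0 or n2 == 0:
        return 0.0  # degenerate bone (zero length): no usable angle
    cos_t = sum(x * y for x, y in zip(v1, v2)) / (n1 * n2)
    cos_t = max(-1.0, min(1.0, cos_t))  # guard against rounding error
    return math.degrees(math.acos(cos_t))


def finger_features(joints):
    """Angles at every interior joint of one finger."""
    return [
        joint_angle(joints[i - 1], joints[i], joints[i + 1])
        for i in range(1, len(joints) - 1)
    ]


def features_from_json(payload):
    """Turn a JSON hand pose (hypothetical layout) into a flat feature list."""
    hand = json.loads(payload)
    features = []
    for finger in sorted(hand):  # fixed order so feature columns line up
        features.extend(finger_features(hand[finger]))
    return features


# Toy pose: one straight finger and one finger bent at right angles.
straight = [[0, 0, 0], [0, 1, 0], [0, 2, 0], [0, 3, 0]]
bent = [[0, 0, 0], [0, 1, 0], [1, 1, 0], [1, 0, 0]]
payload = json.dumps({"index": straight, "middle": bent})
print(features_from_json(payload))
```

A flat list like this, one per captured frame, is the kind of row a scikit-learn classifier can be trained on via `fit` and queried via `predict`. Angles are handy features because they do not change when the whole hand moves or rotates in front of the sensor.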

Special thanks to the following awesome people as well:

- Dr Vijay Paul and Dr Nishanth Krishnananthan for their guidance, especially during the Shark Tank. Their comments and advice gave me good insight into the VR medical training problem and the startup environment.

- Montgomery Guilhaus for the technical guidance (telling us what not to do) that enabled us to do a lot within 6 weeks (less, actually).

- My fellow TCP consultants: Akshin Goswami (the hustler, designer, Unity dev, and in my opinion the MVP), Supritha (Sup) Uday (business researcher), and Duy Minh Nguyen (machine learning programmer), for making the journey productive!

Disclaimers:

- With only 6 weeks to work on the project (i.e., 2-3 weeks of part-time development work), the final output is simply a proof of concept. There are tons of things that need to be addressed for it to be actually usable in real life.

- Apologies if this post reads as a list of technical things done, with no big-picture explanation. Wouldn't want to risk breaking the NDA!